Language detection is a natural language processing task where we need to identify the language of a text or document. Using machine learning for language identification was a difficult task a few years ago because there was not a lot of data on languages, but with the availability of data with ease, several powerful machine learning models are already available for language identification. So, if you want to learn how to train a machine learning model for language detection, then this article is for you. In this article, we will walk you through the task of language detection with machine learning using Python.

Language Detection using Python

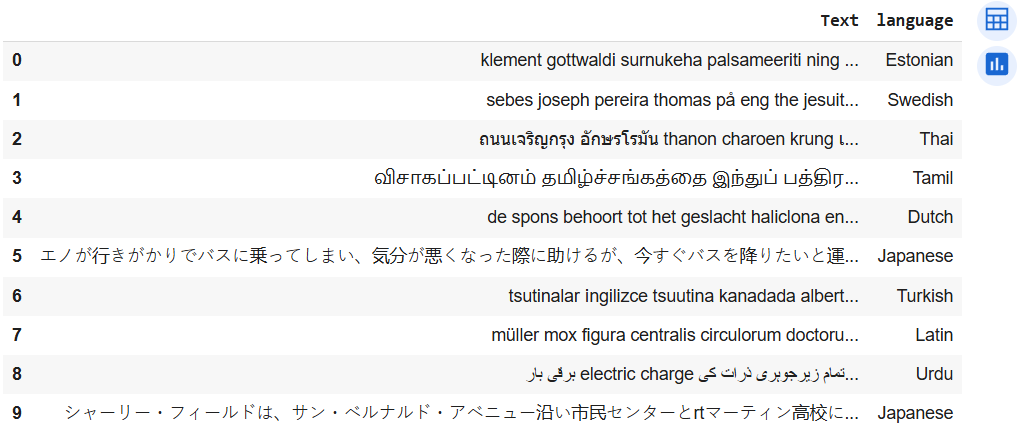

The dataset we are using is sourced from Kaggle. It contains data on 22 popular languages, with 1,000 sentences for each language, making it well-suited for training a language detection model with machine learning. In the section below, we will walk you through the process of training a language detection model using Python.

We’ll begin the task of language detection with machine learning by importing the required Python libraries and loading the dataset:

from google.colab import drive

drive.mount('/content/drive')

import pandas as pd

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import MultinomialNB

file_id="1gmFrPjcqqGzzqRVfasq4rJXitAbDe90y"

url=f"https://drive.google.com/uc?id={file_id}"

data=pd.read_csv(url)

data.head(10)

Now, let’s check whether this dataset contains any null values:

data.isnull().sum()

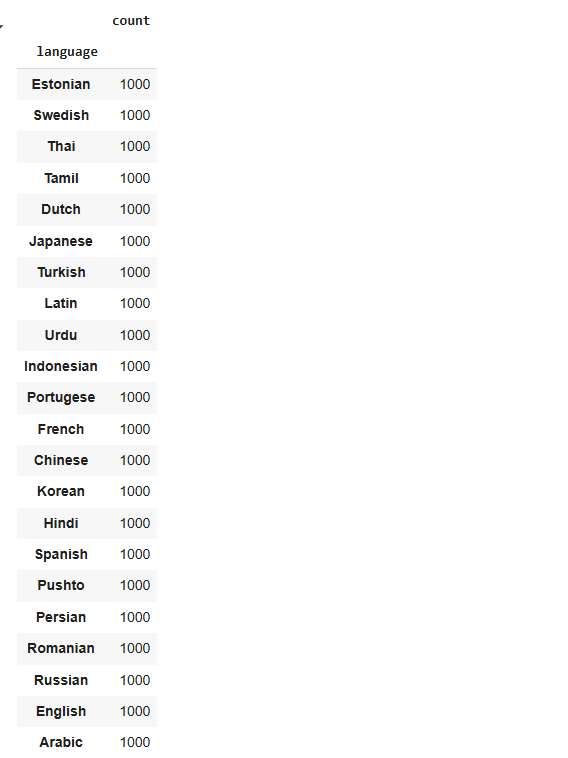

Now, let’s examine all the languages included in this dataset:

data["language"].value_counts()

This dataset consists of 22 languages, each represented by 1,000 sentences. It is well-balanced and contains no missing values, so we can conclude that it is fully prepared for training a machine learning model.

Language Detection Model

Now, we will split the dataset into training and test sets:

x=np.array(data['Text'])

y=np.array(data['language'])

cv=CountVectorizer()

X=cv.fit_transform(x)

X_train, X_test, y_train, y_test=train_test_split(X,y,test_size=0.33,random_state=42)Since this is a multiclass classification problem, we will use the Multinomial Naïve Bayes algorithm to train the language detection model, as it is known to perform very well on tasks involving multiple classes.

model=MultinomialNB()

model.fit(X_train,y_train)

model.score(X_test,y_test)

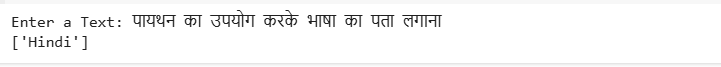

Now, we will use this model to detect the language of a text by taking input from the user:

user=input("Enter a Text: ")

data=cv.transform([user]).toarray()

output=model.predict(data)

print(output)

As we can see, the model performs well. However, it is important to note that this model can only detect the languages included in the dataset.

Conclusion

In this tutorial, we demonstrated how to build a language detection model using Python and machine learning. Leveraging a balanced dataset of 22 languages, with 1,000 sentences each, we ensured our model had a strong foundation and no missing values. By transforming text data with CountVectorizer and splitting it into training and testing sets, we prepared the pipeline for effective classification. Given the problem’s multiclass nature, we chose the Multinomial Naïve Bayes algorithm due to its proven effectiveness in similar tasks. After training, we saw solid performance when detecting the language of user-provided text, though it’s important to remember that the model is limited to the languages present in the dataset.

Also, if you’re interested in seeing how similar machine learning pipelines can be applied to very different prediction tasks — check out Breast Cancer Survival Prediction for another project example.